A sweeping analysis of health data from more than 1.2 million children in Denmark born over a 24-year period found no link between the small amounts of aluminum in vaccines and a wide range of health conditions—including asthma, allergies, eczema, autism, and attention deficit-hyperactivity disorder (ADHD).

The finding, published in the Annals of Internal Medicine, firmly squashes a persistent anti-vaccine talking point that can give vaccine-hesitant parents pause.

Small amounts of aluminum salts have been added to vaccines for decades as adjuvants, that is, components of the vaccine that help drum up protective immune responses against a target germ. Aluminum adjuvants can be found in a variety of vaccines, including those against diphtheria, tetanus, and pertussis, Haemophilus influenzae type b (Hib), and hepatitis A and B.

Despite decades of use worldwide and no clear link to harms, concern about aluminum and cumulative exposures continually resurfaces, largely thanks to anti-vaccine advocates who fearmonger about the element. Chief among those voices is Robert F. Kennedy Jr., the current US health secretary and an ardent anti-vaccine advocate.

In a June 2024 interview with podcaster Joe Rogan, Kennedy falsely claimed that aluminum is "extremely neurotoxic" and "give[s] you allergies." The podcast has racked up nearly 2 million views on YouTube. Likewise, Children's Health Defense, the rabid anti-vaccine organization Kennedy created in 2018, has made wild claims about the safety of aluminum adjuvants. That includes linking them to autism, even though many high-quality scientific studies have found no link between any vaccines and autism.

While anti-vaccine advocates like Kennedy routinely dismiss and attack the plethora of studies that do not support their dangerous claims, the new study should reassure any hesitant parents.

Clear data, unclear future

For the study, lead author Niklas Worm Andersson, of the Statens Serum Institut in Copenhagen, and colleagues tapped into Denmark's national registry to analyze medical records of over 1.2 million children born in the country between 1997 and 2018. During that time, new vaccines were introduced and recommendations shifted, creating variation in how many aluminum-containing vaccines children received.

The researchers calculated cumulative vaccine-based aluminum exposure for each child, which ranged from 0 mg to 4.5 mg by age 2. They then looked for associations between those exposures and 50 chronic conditions spanning autoimmune, allergic, atopic, and neurodevelopmental disorders.

The results were clear across the board: There was no statistically significant increased risk for any of the 50 conditions examined. The study's statistics couldn't entirely rule out very small relative increases in risk (1 percent to 2 percent) for some of the rare conditions analyzed, but overall, they did rule out meaningful increases across the range of conditions. In short, the study found no evidence that aluminum adjuvants pose a health concern.

Still, it's uncertain whether the fresh data will keep aluminum-containing vaccines out of Kennedy's crosshairs. Last month, Bloomberg reported that Kennedy was considering asking his hand-picked vaccine advisory committee to review aluminum in vaccines. That committee, the Advisory Committee on Immunization Practices (ACIP), shapes the Centers for Disease Control and Prevention's immunization schedule, which sets vaccination recommendations nationwide and determines which vaccines are covered by health insurance plans.

Kennedy's reconstituted ACIP has little expertise in vaccines and has embraced anti-vaccine views. For instance, in its first meeting at the end of June, Kennedy's ACIP voted to drop long-standing CDC recommendations for flu vaccines that contain the mercury-based preservative thimerosal. The vote followed an anti-vaccine presentation from the former president of Kennedy's anti-vaccine group, Children's Health Defense. Thimerosal, like aluminum adjuvants, has been safely used for decades around the world but has long been a target of anti-vaccine advocates. If the new ACIP similarly reviews and votes against aluminum adjuvants, it would jeopardize the availability of at least two dozen vaccines, Bloomberg reported, citing sources familiar with the matter.

It’s unclear if Kennedy will pursue an ACIP review of aluminum-containing vaccines. The Department of Health and Human Services declined to comment on the matter to Bloomberg, and ACIP members did not specifically mention future plans to review aluminum adjuvants when they met in June.

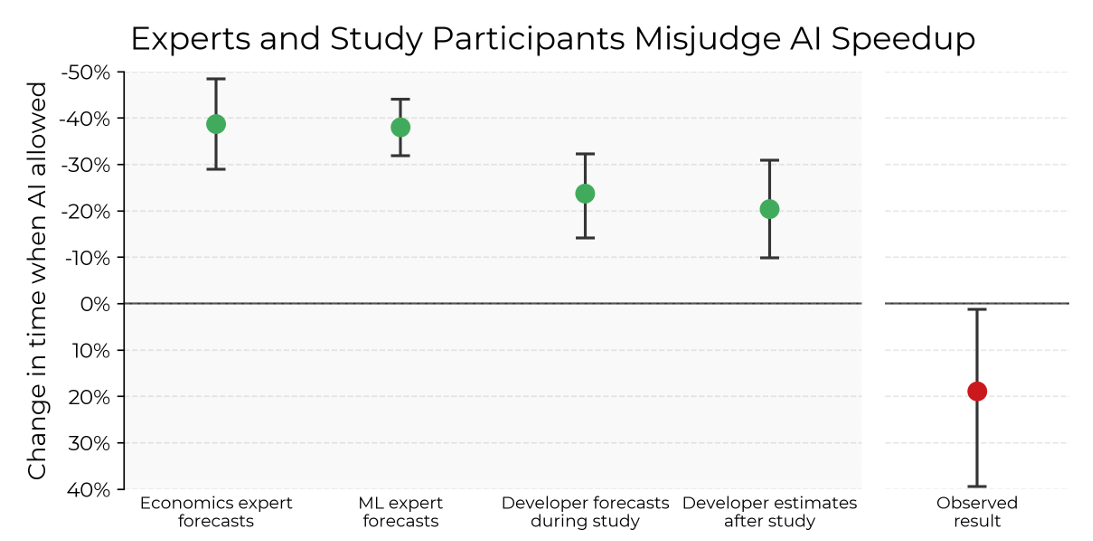

Experts and the developers themselves expected time savings that didn't materialize when AI tools were actually used.

Credit:

Experts and the developers themselves expected time savings that didn't materialize when AI tools were actually used.

Credit:

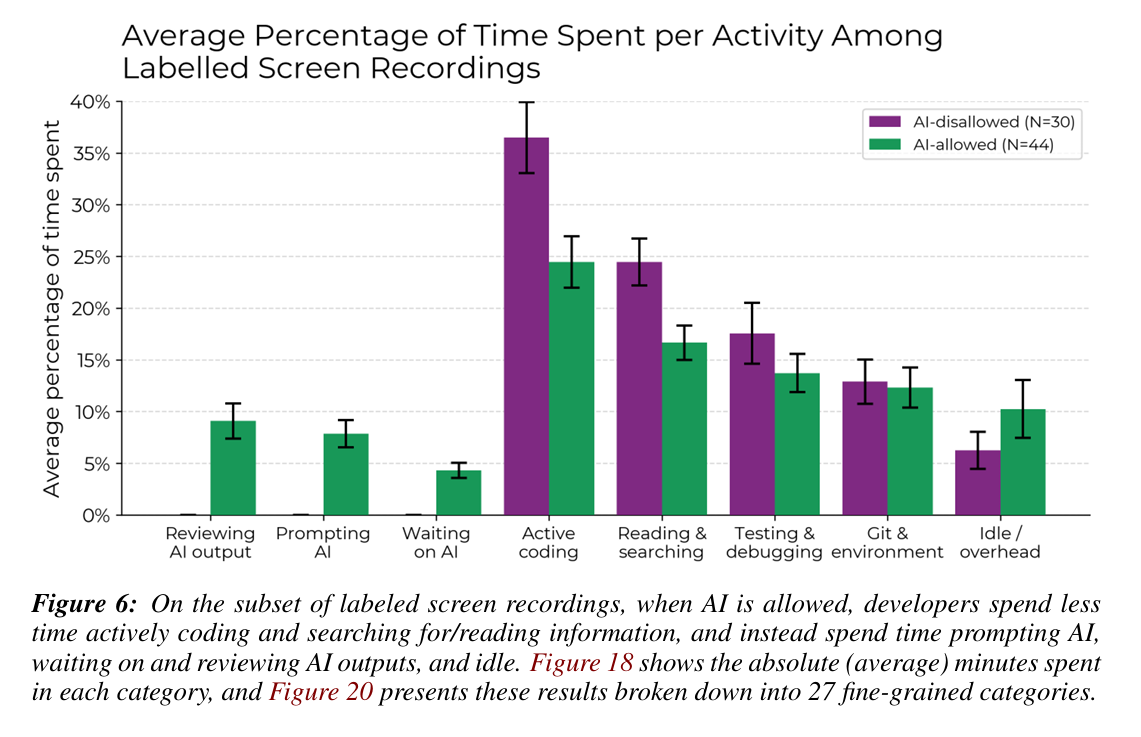

Time saved on things like active coding was overwhelmed by the time needed to prompt, wait on, and review AI outputs in the study.

Credit:

Time saved on things like active coding was overwhelmed by the time needed to prompt, wait on, and review AI outputs in the study.

Credit: